By Paulo Freitas de Araujo Filho

This is the fourth blog post of our series “Empowering Intrusion Detection Systems with Machine Learning”, in which we discuss the use of machine learning in intrusion detection systems (IDSs). In our previous post, we discussed traditional one-class novelty detection algorithms, which are trained with data samples from a single class and detect anomalies by measuring deviations from that class. Now we go a step further and focus on a deep learning structure called the autoencoder, which can also be used as a one-class novelty detection method for IDSs.

One-class novelty detection algorithms are particularly interesting when it is difficult (or expensive) to obtain labeled data examples from all classes. For instance, while it may be easy to collect a lot of benign data from networks and systems, it is usually very difficult and expensive to obtain data examples of malicious activities. However, traditional one-class algorithms, such as one-class support vector machine (OCSVM) [1] and isolation forest [2], require considerable effort to select and extract features. Deep learning-based techniques, on the other hand, overcome this limitation by automatically learning the features that best represent the data [3], [4].

Autoencoders

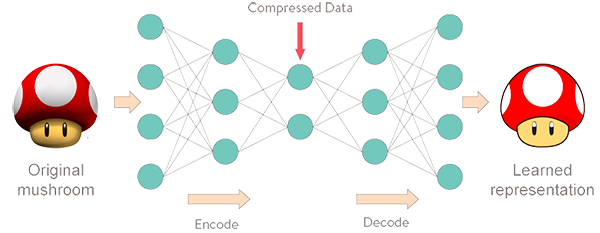

Autoencoders are deep learning structures that were originally developed for compressing data [4]-[7]. They consist of a neural network that can be divided into two parts: an encoder and a decoder. The encoder comprises the first layers of the network, whose goal is to reduce the data dimensionality. That is, it encodes input data into representations with fewer dimensions. For instance, a 128×128 image may be encoded into a compressed 32×32 representation. Similarly, any generic vector, such as one containing data from networks and systems, can be compressed with an autoencoder’s encoder.

The decoder, in turn, does the opposite task: it reconstructs the original input data from its compressed representation. Hence, the autoencoder encodes data patterns into lower-dimensional representations and then reconstructs them so that they have the original input dimension and are as similar as possible to the input [5]-[7]. However, as one might expect, the reconstruction is rarely identical to the original input; the difference between them is called the reconstruction error [7]-[9]. Figure 1 illustrates an autoencoder structure that encodes and decodes mushroom images.

Figure 2 shows a typical visualization of autoencoder reconstructions.
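To make the encode, decode, and reconstruction-error steps concrete, here is a minimal Python sketch. It is a hand-designed toy rather than a trained neural network: the hypothetical `encode` function averages neighboring feature pairs (4 values down to 2), and the hypothetical `decode` function copies each code value back into both positions. The reconstruction error is measured as the mean squared error, which is one common choice among several.

```python
def encode(x):
    """Compress a 4-value input into 2 values by averaging neighboring pairs."""
    return [(x[0] + x[1]) / 2, (x[2] + x[3]) / 2]


def decode(z):
    """Expand the 2-value code back to 4 values by duplicating each entry."""
    return [z[0], z[0], z[1], z[1]]


def reconstruction_error(x):
    """Mean squared error between an input and its reconstruction."""
    x_hat = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


# An input whose paired features match survives compression unchanged...
print(reconstruction_error([1.0, 1.0, 3.0, 3.0]))  # 0.0
# ...while an input that breaks that pattern cannot be rebuilt exactly.
print(reconstruction_error([1.0, 3.0, 3.0, 1.0]))  # 1.0
```

A real autoencoder learns its encoder and decoder from data instead of having them fixed by hand, but the reconstruction error is computed in the same way.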

OK, sounds interesting. But how can such an autoencoder structure be used to detect intrusions? It all comes down to the training data. Take the example of Figure 1, in which the autoencoder was trained with mushroom images. If we use the autoencoder to reconstruct a mushroom image, even an unseen one, the reconstruction error will be small, as the autoencoder has learned what mushrooms look like. However, if we give the autoencoder a dog image, the reconstruction error will be larger, as it has not been trained with dog images, i.e., it does not know how to compress and reconstruct them. Following the same idea, if the autoencoder is trained with only benign data from networks and systems and is later used to reconstruct incoming data samples, it should produce small reconstruction errors for benign samples and large reconstruction errors for malicious ones. Several research works have exploited this strategy to detect cyber-attacks [8]-[11].
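The train-on-benign, score-by-error idea can be sketched end to end with a deliberately tiny model. The Python code below is a minimal illustration under strong simplifying assumptions: the hypothetical benign data has just two strongly correlated features, the autoencoder is linear (2 → 1 → 2) with no activation functions, and it is trained with plain stochastic gradient descent on the squared reconstruction error. The record `(1.0, -1.0)` is a hypothetical malicious sample. Real IDS autoencoders use deep non-linear networks built with frameworks such as TensorFlow or PyTorch.

```python
import random


def make_benign(n, rng):
    """Hypothetical benign records: two features that almost always move together."""
    samples = []
    for _ in range(n):
        t = rng.uniform(-1.0, 1.0)
        samples.append((t, t + rng.uniform(-0.05, 0.05)))
    return samples


def reconstruct(we, wd, x):
    """Linear autoencoder: encode 2 features into 1 code value, then decode."""
    z = we[0] * x[0] + we[1] * x[1]   # encoder
    return (wd[0] * z, wd[1] * z), z  # decoder output and the code


def reconstruction_error(we, wd, x):
    x_hat, _ = reconstruct(we, wd, x)
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def train(samples, epochs=200, lr=0.01):
    """Plain SGD on the squared reconstruction error, using benign data only."""
    we, wd = [0.1, 0.3], [0.3, 0.1]  # small, arbitrary starting weights
    for _ in range(epochs):
        for x in samples:
            x_hat, z = reconstruct(we, wd, x)
            r = [x_hat[i] - x[i] for i in range(2)]  # residual x_hat - x
            dz = 2 * (wd[0] * r[0] + wd[1] * r[1])   # gradient w.r.t. the code z
            for i in range(2):
                wd[i] -= lr * 2 * r[i] * z           # decoder gradient step
                we[i] -= lr * dz * x[i]              # encoder gradient step
    return we, wd


rng = random.Random(42)
we, wd = train(make_benign(100, rng))
benign_errors = [reconstruction_error(we, wd, x) for x in make_benign(20, rng)]
attack_error = reconstruction_error(we, wd, (1.0, -1.0))  # breaks the correlation
print(f"max benign error: {max(benign_errors):.4f}, attack error: {attack_error:.4f}")
```

Because the benign data varies almost only along one direction, the single code value captures it well, whereas `(1.0, -1.0)` lies along the direction the model never learned, so its error should land far above every benign error.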

Variational Autoencoders

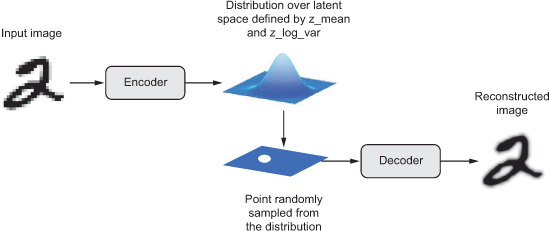

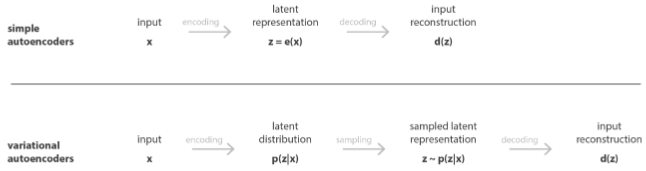

There are also several variations of the autoencoder structure that improve it in different ways. Among them, variational autoencoders (VAEs) enhance autoencoders by modeling data with statistical distributions. While a traditional autoencoder maps each data sample to a fixed, lower-dimensional (compressed) representation, a VAE models the lower-dimensional space of compressed samples with a statistical distribution, usually a Gaussian, parameterized by a mean μ and a standard deviation σ [5], [12], [13]. That is, instead of simply mapping each sample to a single compressed pattern, a VAE learns a distribution that can represent all the compressed data. This property allows us to draw random vectors from that distribution, regardless of which input would produce them, and use the VAE’s decoder to turn them into new data samples. Hence, VAEs not only reconstruct data samples but can also generate new ones [5], [12], [13].
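The two ingredients that set a VAE apart can be sketched in a few lines of Python. This minimal sketch assumes the usual VAE setup, which the post does not spell out: the encoder outputs a mean μ and a standard deviation σ per latent dimension, the latent distribution is Gaussian, and training adds a Kullback-Leibler (KL) penalty that keeps it close to a standard normal N(0, 1). The encoder and decoder networks themselves are omitted, and the function names are illustrative.

```python
import math
import random


def sample_latent(mu, sigma, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1).

    Writing the sample this way keeps z differentiable with respect to mu and
    sigma, which is what lets a VAE be trained with gradient descent.
    """
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]


def kl_to_standard_normal(mu, sigma):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(s * s + m * m - 1.0 - 2.0 * math.log(s)
                     for m, s in zip(mu, sigma))


def vae_loss(reconstruction_error, mu, sigma):
    """Training objective: reconstruction term plus the KL regularizer."""
    return reconstruction_error + kl_to_standard_normal(mu, sigma)


rng = random.Random(0)
# Generation: draw z from N(0, 1) directly, with no input sample involved,
# then (not shown) pass it through the trained decoder.
z_new = sample_latent([0.0, 0.0], [1.0, 1.0], rng)
print(kl_to_standard_normal([0.0, 0.0], [1.0, 1.0]))  # 0.0
print(kl_to_standard_normal([1.0], [1.0]))            # 0.5
```

The KL term is zero only when the encoder's distribution matches N(0, 1) exactly; it is this pressure that makes random draws from N(0, 1) decode into plausible new samples.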

As with traditional autoencoders, VAEs can be trained with only benign data from networks and systems so that they learn normal patterns, and they detect malicious samples by computing the reconstruction error of incoming samples. Small errors indicate that the evaluated samples belong to the same category as the training data, i.e., benign data. Large reconstruction errors, on the other hand, indicate that the evaluated samples do not belong to the training data category, i.e., they are anomalies that may result from malicious activities [7]. Recent studies have reported that VAEs outperform traditional autoencoders at detecting cyber-attacks [12], [13]. Figure 3 shows the VAE structure and Figure 4 compares it to the autoencoder’s structure.
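Because a VAE samples its compressed representation, the reconstruction error of a given input varies slightly from one pass to the next. One common way to turn it into a stable anomaly score, sketched below under stated assumptions, is to average the error over several latent draws. The `encoder` and `decoder` functions here are hypothetical, hand-set stand-ins for trained networks (they assume benign records have two equal features), and the number of draws is an arbitrary choice.

```python
import random


def encoder(x):
    """Hypothetical trained encoder: returns (mu, sigma) of a 1-D latent code."""
    return (x[0] + x[1]) / 2, 0.1


def decoder(z):
    """Hypothetical trained decoder: benign records have two equal features."""
    return (z, z)


def anomaly_score(x, rng, draws=50):
    """Average squared reconstruction error over several sampled latent codes."""
    mu, sigma = encoder(x)
    total = 0.0
    for _ in range(draws):
        z = mu + sigma * rng.gauss(0.0, 1.0)  # reparameterized sample
        x_hat = decoder(z)
        total += sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return total / draws


rng = random.Random(7)
print(anomaly_score((0.5, 0.5), rng))   # small: fits the learned pattern
print(anomaly_score((1.0, -1.0), rng))  # large: does not
```

The same threshold logic used for plain autoencoders then applies to this averaged score.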

Deployment

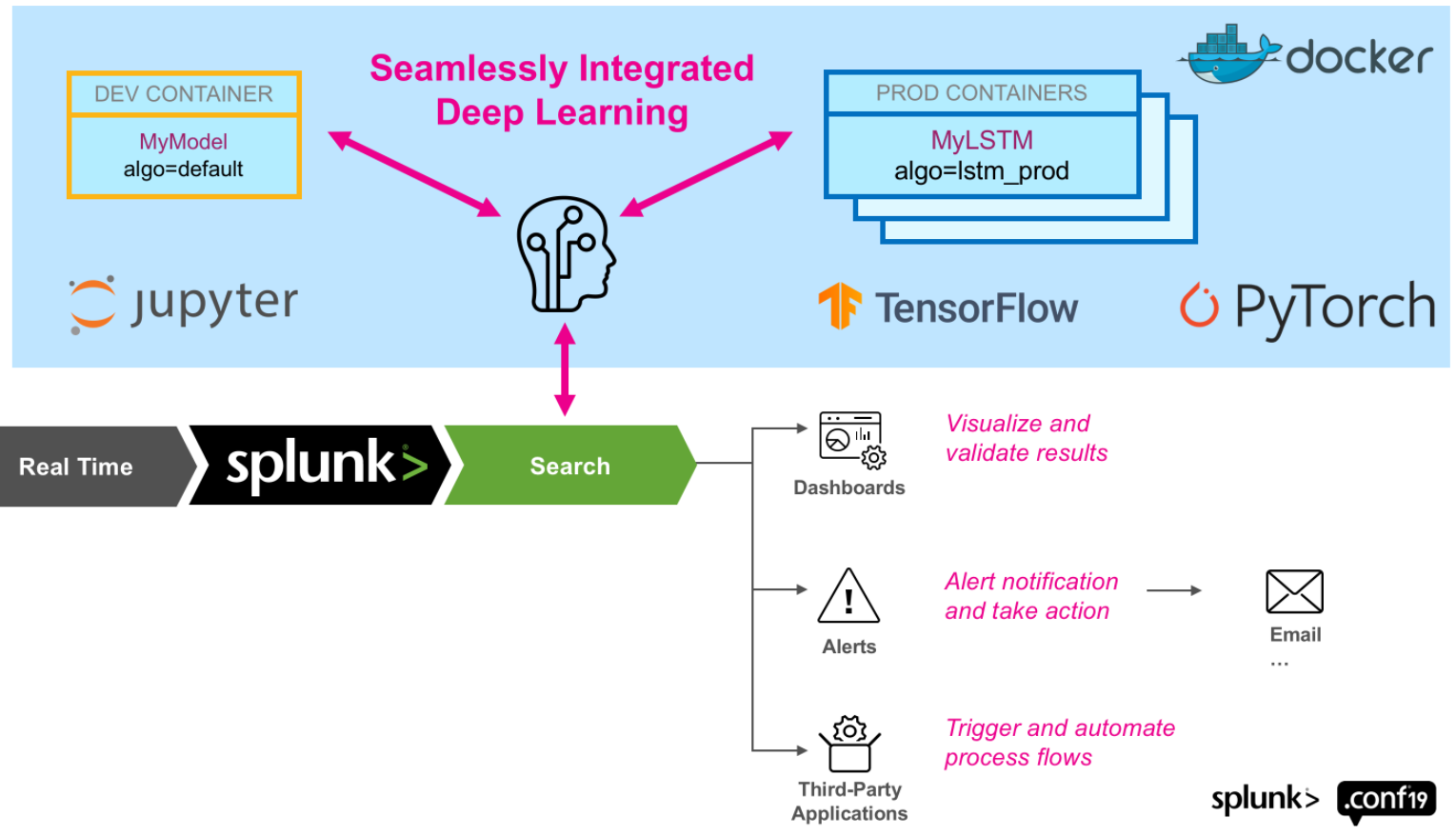

Splunk, one of the most widely used security information and event management (SIEM) platforms, collects, manages, and correlates data and runs cyber-attack detection rules. Its Deep Learning Toolkit (DLTK) offers great flexibility by connecting Splunk to a container environment, which makes it possible to implement deep learning algorithms such as autoencoders and VAEs, train them, and deploy the trained models as if they were traditional cyber-attack detection rules. Figure 5 illustrates the integration between Splunk and a Docker environment that supports the most common deep learning frameworks, TensorFlow and PyTorch. Please refer to [14] and [15] for more information.

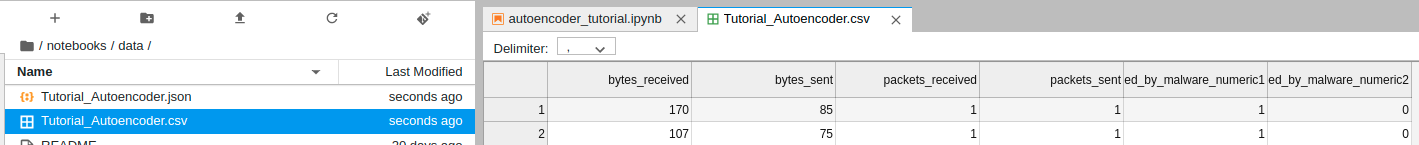

Hence, autoencoders and VAEs can be implemented and deployed with Splunk’s DLTK and trained with only benign data from, for example, firewall logs. The trained models are then configured as rules that flag as suspicious any incoming firewall log whose reconstruction error is higher than a threshold. Figure 6 shows a sample of firewall log data.

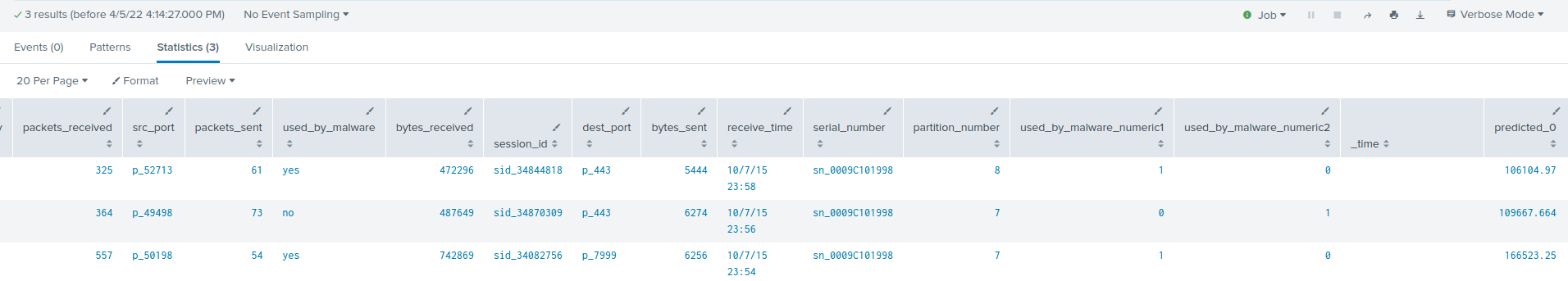

Figure 7 shows three firewall log entries that an autoencoder flagged as suspicious because of their high reconstruction errors, which are shown in the last column of Figure 7 as “predicted_0”.
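The alerting rule described above boils down to comparing each record’s reconstruction error with a threshold. The post does not say how the threshold is chosen; the Python sketch below assumes one common heuristic, the mean plus three standard deviations of the errors observed on benign training data (a high percentile is another frequent choice). The record layout, the field names, and the error values are hypothetical and made up for illustration.

```python
import statistics


def fit_threshold(benign_errors, k=3.0):
    """Threshold = mean + k * standard deviation of benign reconstruction errors."""
    return statistics.mean(benign_errors) + k * statistics.pstdev(benign_errors)


def flag_suspicious(records, threshold):
    """Return the records whose reconstruction error exceeds the threshold."""
    return [r for r in records if r["reconstruction_error"] > threshold]


# Made-up errors a trained model might produce on benign training logs.
benign_errors = [0.010, 0.012, 0.008, 0.011, 0.009, 0.010]
threshold = fit_threshold(benign_errors)

incoming = [
    {"src_ip": "10.0.0.5", "dest_port": 443, "reconstruction_error": 0.011},
    {"src_ip": "10.0.0.9", "dest_port": 23, "reconstruction_error": 0.350},
]
flagged = flag_suspicious(incoming, threshold)
for record in flagged:
    print("suspicious:", record)
```

Raising `k` trades fewer false alarms for a higher chance of missing subtle attacks, so the value is worth tuning on held-out benign data.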

Challenges and Drawbacks

Although IDSs based on autoencoders and VAEs have shown very promising results in detecting cyber-attacks, they require that only benign data samples be used in training. Thus, as with traditional one-class novelty detection algorithms, such IDSs must rely on clustering or other techniques to first identify benign samples and ensure that only those are used in training. Otherwise, malicious samples contaminating the training set could mislead the model into treating them as benign.
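The choice of cleaning technique is left open here. As one simple illustration of the “other techniques” option, and not a substitute for a proper clustering step, the Python sketch below drops training samples whose score is an outlier according to the modified z-score, which relies on the median and the median absolute deviation (MAD) so that a few contaminating samples cannot easily skew it. It assumes each candidate training sample already has a numeric score, for example its reconstruction error under a first-pass model; the 3.5 cut-off is a conventional default for this statistic, not a value from the post, and the sample names are hypothetical.

```python
import statistics


def drop_outliers(samples, scores, cutoff=3.5):
    """Keep only the samples whose modified z-score is at most `cutoff`.

    Modified z-score = 0.6745 * (score - median) / MAD, where MAD is the
    median absolute deviation of the scores.
    """
    med = statistics.median(scores)
    mad = statistics.median([abs(s - med) for s in scores])
    if mad == 0:
        return list(samples)  # scores too uniform to single out outliers
    return [x for x, s in zip(samples, scores)
            if abs(0.6745 * (s - med) / mad) <= cutoff]


samples = ["log1", "log2", "log3", "log4", "log5", "log6"]
scores = [0.10, 0.12, 0.11, 0.09, 0.10, 5.00]
print(drop_outliers(samples, scores))
# ['log1', 'log2', 'log3', 'log4', 'log5']
```

After filtering, the model can be retrained on the kept samples; repeating the score-filter-retrain loop a few times is a common refinement.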

Conclusion

In this post, we discussed IDSs that use autoencoders to detect anomalies by computing reconstruction errors. Specifically, large reconstruction errors reveal non-conformities with the benign training data and may therefore indicate malicious activity. At Tempest, we are investigating and relying on such techniques to better protect our customers! Stay tuned for our next post!

References

[1] V. Chandola, A. Banerjee, and V. Kumar, “Anomaly detection: A survey,” ACM Comput. Surv., vol. 41, no. 3, p. 15, 2009.

[2] F. T. Liu, K. M. Ting, and Z.-H. Zhou, “Isolation-based anomaly detection,” ACM Trans. Knowl. Discovery Data, vol. 6, no. 1, pp. 3:1–3:39, Mar. 2012.

[3] P. Freitas de Araujo-Filho, G. Kaddoum, D. R. Campelo, A. Gondim Santos, D. Macêdo, and C. Zanchettin, “Intrusion Detection for Cyber–Physical Systems Using Generative Adversarial Networks in Fog Environment,” in IEEE Internet of Things J., vol. 8, no. 8, pp. 6247-6256, 15 Apr. 2021, doi: 10.1109/JIOT.2020.3024800.

[4] J. Schmidhuber, “Deep learning in neural networks: An overview,” Neural Netw., vol. 61, pp. 85–117, 2015.

[5] B. Pesquet. Autoencoders. Accessed: Apr. 22, 2022. [Online]. Available: https://www.bpesquet.fr/mlhandbook/algorithms/autoencoders.html

[6] D. Dataman. Anomaly Detection with Autoencoders Made Easy. Accessed: Apr. 22, 2022. [Online]. Available: https://towardsdatascience.com/anomaly-detection-with-autoencoder-b4cdce4866a6

[7] R. Khandelwal. Anomaly Detection using Autoencoders. Accessed: Apr. 22, 2022. [Online]. Available: https://towardsdatascience.com/anomaly-detection-using-autoencoders-5b032178a1ea

[8] M. Gharib, B. Mohammadi, S. Hejareh Dastgerdi, and M. Sabokrou, “AutoIDS: Auto-encoder based method for intrusion detection system,” 2019, arXiv:1911.03306. [Online]. Available: http://arxiv.org/abs/1911.03306

[9] Y. Mirsky, T. Doitshman, Y. Elovici, and A. Shabtai, “Kitsune: An ensemble of autoencoders for online network intrusion detection,” 2018, arXiv:1802.09089.

[10] F. Farahnakian and J. Heikkonen, “A deep auto-encoder based approach for intrusion detection system,” 2018 20th International Conference on Advanced Communication Technology (ICACT), 2018, pp. 178-183, doi: 10.23919/ICACT.2018.8323688.

[11] R. Zhao et al., “An Efficient Intrusion Detection Method Based on Dynamic Autoencoder,” in IEEE Wireless Communications Letters, vol. 10, no. 8, pp. 1707-1711, Aug. 2021, doi: 10.1109/LWC.2021.3077946.

[12] J. Rocca. Understanding Variational Autoencoders (VAEs). Accessed: Apr. 22, 2022. [Online]. Available: https://towardsdatascience.com/understanding-variational-autoencoders-vaes-f70510919f73

[13] S. Zavrak and M. İskefiyeli, “Anomaly-Based Intrusion Detection From Network Flow Features Using Variational Autoencoder,” in IEEE Access, vol. 8, pp. 108346-108358, 2020, doi: 10.1109/ACCESS.2020.3001350.

[14] D. Federschmidt, P. Salm, L. Utz, G. Ainslie-Malik, P. Drieger, A. Tellez, P. Brunel, R. Fujara, “Splunk App for Data Science and Deep Learning (DLTK)”, Accessed: Jun. 23, 2022. [Online]. Available: https://splunkbase.splunk.com/app/4607/#/details

[15] D. Lambrou, “Splunk with the Power of Deep Learning Analytics and GPU Acceleration”, Accessed: Jun. 23, 2022. [Online]. Available: https://www.splunk.com/en_us/blog/tips-and-tricks/splunk-with-the-power-of-deep-learning-analytics-and-gpu-acceleration.html

Other articles in this series

Empowering Intrusion Detection Systems with Machine Learning

Part 1 of 5: Signature vs. Anomaly-Based Intrusion Detection Systems

Part 2 of 5: Clustering-Based Unsupervised Intrusion Detection Systems

Part 3 of 5: One-Class Novelty Detection Intrusion Detection Systems

Part 4 of 5: Intrusion Detection using Autoencoders

Part 5 of 5: Intrusion Detection using Generative Adversarial Networks