Adversarial Attacks on the Physical Layer

This is the second blog post of our series “Threats to Machine Learning-Based Systems”, in which we discuss the risks and vulnerabilities introduced by the use of machine learning. As presented in our previous post, adversarial attacks introduce imperceptible perturbations that mislead machine learning models and may therefore compromise the systems that rely on them, such as intrusion detection and facial recognition systems [1], [2]. In this post, we discuss how adversarial attacks affect the physical layer of the OSI model and could shut down wireless communications, such as 5G, focusing on a modulation classification application [3]. In the following posts, we will cover adversarial attacks on the network and application layers of the OSI model and discuss possible defense mechanisms against them.

Machine learning in wireless communications and modulation classification

While most of us are familiar with machine learning applications related to image classification, such as facial recognition and obstacle detection, machine learning has been widely adopted in many other fields thanks to its powerful classification capabilities. In particular, it has proven useful in several wireless communications applications, such as classifying modulation schemes.

In wireless communications, devices communicate with each other by transmitting electromagnetic waves. To carry data over these waves, the wireless transmitter embedded in every wireless device must modulate the signal, i.e., encode the data onto the wave in a particular manner that allows it to be transmitted. Conversely, the wireless receiver, which is also embedded in wireless devices, demodulates the signal, i.e., decodes the received wave back into data that can be understood and used. Although there are many different modulation schemes, a signal must always be modulated and demodulated using the same scheme, because demodulation must undo the modulation operation [4], [5]. As an analogy, imagine that modulation corresponds to a person speaking in English: the audience, i.e., the people who receive the spoken message, must also understand English; otherwise, they will not be able to understand the message. Figure 1 shows a signal before modulation, after modulation, and after demodulation.
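To make the “same scheme on both ends” requirement concrete, here is a minimal sketch of digital modulation at baseband, assuming QPSK (Quadrature Phase Shift Keying), a scheme that maps each pair of bits to one complex I/Q symbol. The Gray-coded bit-to-symbol mapping and the function names are illustrative assumptions, and a real transmitter would also shift these symbols onto a carrier frequency, which is omitted here.

```python
import math

# Gray-coded QPSK (illustrative mapping): each pair of bits becomes one
# complex symbol I + jQ; bit 0 maps to +1/sqrt(2), bit 1 to -1/sqrt(2).
SCALE = 1 / math.sqrt(2)


def qpsk_modulate(bits):
    """Map an even-length list of bits to QPSK symbols."""
    return [complex(SCALE * (1 - 2 * bits[i]), SCALE * (1 - 2 * bits[i + 1]))
            for i in range(0, len(bits), 2)]


def qpsk_demodulate(symbols):
    """Undo qpsk_modulate: read one bit from the sign of I and one from Q."""
    bits = []
    for s in symbols:
        bits.append(0 if s.real >= 0 else 1)
        bits.append(0 if s.imag >= 0 else 1)
    return bits


def bpsk_demodulate(symbols):
    """A BPSK demodulator reads a single bit per symbol, from I only."""
    return [0 if s.real >= 0 else 1 for s in symbols]


message = [0, 1, 1, 0, 1, 1, 0, 0]
tx = qpsk_modulate(message)
print(qpsk_demodulate(tx) == message)  # True: same scheme on both ends
print(bpsk_demodulate(tx))             # [0, 1, 1, 0]: wrong scheme, wrong bits
```

Demodulating the QPSK symbols with a BPSK demodulator returns half as many bits, and they do not match the original message, which is the digital counterpart of the English-speaking audience facing a different language.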

With the Internet of Things (IoT) and 5G enabling the widespread adoption of connected devices, such as home appliances and vehicles, dynamically changing the modulation scheme helps organize the transmission of electromagnetic waves so that they do not interfere with each other [7], [8]. Thus, modern wireless transmitters dynamically switch the modulation scheme they use. As a consequence, wireless receivers must automatically recognize which modulation scheme is being used in order to demodulate signals correctly. Therefore, they require automatic modulation classifiers, which increasingly rely on machine learning [4], [5], [7]-[9].
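The receiver-side logic described above can be sketched as a classifier whose label selects the demodulator. This is a minimal sketch, assuming only two candidate schemes (BPSK and QPSK), ignoring noise and carrier phase offsets, and using a hypothetical hand-crafted feature instead of a machine learning model, just to show where the classifier sits in the receiver and what happens when its label is wrong.

```python
import math

SCALE = 1 / math.sqrt(2)


def bpsk_modulate(bits):
    # BPSK: one bit per symbol, on the in-phase (real) axis only
    return [complex(1 - 2 * b, 0.0) for b in bits]


def qpsk_modulate(bits):
    # QPSK: two bits per symbol, one on I and one on Q
    return [complex(SCALE * (1 - 2 * bits[i]), SCALE * (1 - 2 * bits[i + 1]))
            for i in range(0, len(bits), 2)]


def bpsk_demodulate(symbols):
    return [0 if s.real >= 0 else 1 for s in symbols]


def qpsk_demodulate(symbols):
    bits = []
    for s in symbols:
        bits += [0 if s.real >= 0 else 1, 0 if s.imag >= 0 else 1]
    return bits


DEMODULATORS = {"BPSK": bpsk_demodulate, "QPSK": qpsk_demodulate}


def classify_modulation(symbols, threshold=0.25):
    # Hypothetical hand-crafted feature: share of energy on the Q axis.
    # Ideal BPSK has none there; QPSK has about half.
    total = sum(abs(s) ** 2 for s in symbols)
    quadrature = sum(s.imag ** 2 for s in symbols)
    return "BPSK" if quadrature / total < threshold else "QPSK"


def receive(symbols, classifier=classify_modulation):
    # The classifier's label decides which demodulator is applied
    scheme = classifier(symbols)
    return scheme, DEMODULATORS[scheme](symbols)


message = [1, 0, 0, 1]
print(receive(bpsk_modulate(message)))  # ('BPSK', [1, 0, 0, 1])
print(receive(qpsk_modulate(message)))  # ('QPSK', [1, 0, 0, 1])
# A misled classifier selects the wrong demodulator and the message is lost
print(receive(qpsk_modulate(message), classifier=lambda s: "BPSK"))  # ('BPSK', [1, 0])
```

In a real receiver, `classify_modulation` would be a trained model such as VT-CNN2, and that model is exactly the component an adversarial attack targets.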

The VT-CNN2 Modulation Classifier

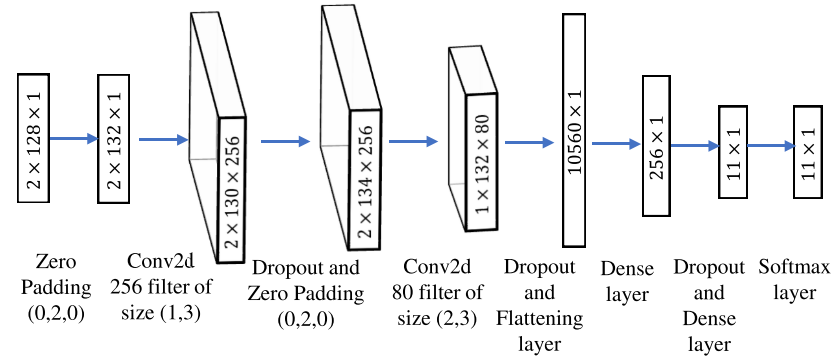

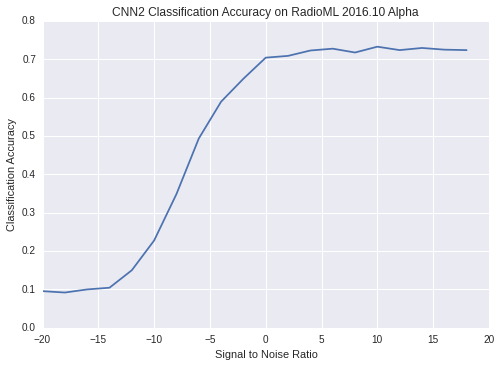

The VT-CNN2 modulation classifier, proposed in [4], [5], is one of the most widely used classifiers in works that address modulation classification problems. It is a deep convolutional neural network that distinguishes between eleven different modulation schemes: given a sample of a received signal, it identifies which of eleven possible techniques was used to modulate it. Figures 2 and 3 show the VT-CNN2 neural network architecture and its classification results, respectively. The signal-to-noise ratio (SNR) in Figure 3 compares the power of the signal with the power of the noise that corrupts it: signals with a high SNR contain little noise, whereas signals with a low SNR are noisier.
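To give a feel for the input and size of VT-CNN2, the sketch below walks through the layer output shapes and parameter counts, and also computes SNR in decibels as 10 · log10(signal power / noise power), so a signal with 100 times more power than its noise has an SNR of 20 dB. The layer sizes (an input of 2×128 I/Q samples, two convolutional layers with 256 and 80 filters, a 256-unit dense layer, and 11 outputs) are recalled from the public example notebook [10] and should be treated as assumptions to check there; dropout and activations are omitted because they change neither shapes nor parameter counts.

```python
import math

# Layer sizes recalled from the public RML2016.10a / VT-CNN2 example [10];
# treat them as assumptions. Shapes are (channels, rows, time samples).
INPUT_SHAPE = (1, 2, 128)  # 128 time samples of I and Q
NUM_CLASSES = 11


def conv_layer(shape, filters, kernel_h, kernel_w, pad_w):
    """Zero-pad the time axis, then apply a 'valid' 2-D convolution."""
    channels, height, width = shape
    width += 2 * pad_w
    params = filters * channels * kernel_h * kernel_w + filters  # weights + biases
    return (filters, height - kernel_h + 1, width - kernel_w + 1), params


def vtcnn2_summary():
    """Return (layer name, output shape, parameter count) for each layer."""
    shape1, p1 = conv_layer(INPUT_SHAPE, 256, 1, 3, pad_w=2)
    shape2, p2 = conv_layer(shape1, 80, 2, 3, pad_w=2)
    flat = shape2[0] * shape2[1] * shape2[2]
    return [
        ("conv1", shape1, p1),
        ("conv2", shape2, p2),
        ("dense1", (256,), flat * 256 + 256),
        ("dense2", (NUM_CLASSES,), 256 * NUM_CLASSES + NUM_CLASSES),
    ]


def snr_db(signal, noise):
    """SNR in decibels: 10 * log10(mean signal power / mean noise power)."""
    p_signal = sum(abs(x) ** 2 for x in signal) / len(signal)
    p_noise = sum(abs(x) ** 2 for x in noise) / len(noise)
    return 10 * math.log10(p_signal / p_noise)


summary = vtcnn2_summary()
for name, shape, params in summary:
    print(name, shape, params)  # e.g. conv1 (256, 2, 130) 1024
print("total parameters:", sum(p for _, _, p in summary))  # 2830427
print(round(snr_db([1, -1, 1, -1], [0.1, -0.1, 0.1, -0.1]), 6))  # 20.0
```

Almost all of the parameters sit in the first dense layer, which receives the 10,560 flattened convolutional features.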

Compromising modulation classifiers with adversarial attacks

As discussed in our previous post, machine learning models have been shown to be vulnerable to adversarial attacks, which introduce specially crafted, imperceptible perturbations that cause wrong classification results [7]-[9]. Hence, such attacks may induce modulation classifiers to misidentify the modulation scheme, so that the signal is not correctly demodulated and communication is compromised. Thus, despite their good classification results, machine learning-based modulation classifiers such as VT-CNN2 call into question the security and reliability of wireless communication systems [8].
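To illustrate how a tiny, deliberately chosen perturbation can flip a classification, the sketch below applies the fast gradient sign method (FGSM), a classic gradient-based attack, to a toy linear two-class classifier. The classifier, its weights, the input values, and the class names are all hypothetical; attacks on a real model such as VT-CNN2 compute the gradient through the whole network rather than reading it from fixed weights.

```python
# Toy linear classifier: positive score -> "QPSK", otherwise "BPSK" (hypothetical)
WEIGHTS = [0.5, -0.3, 0.8, 0.2]
BIAS = -0.1


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    return "QPSK" if score(x) > 0 else "BPSK"


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, epsilon):
    # For a linear score the gradient w.r.t. x is WEIGHTS, so stepping each
    # element by -epsilon * sign(gradient) lowers the score as much as possible
    # while changing no element by more than epsilon.
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


x_clean = [0.2, 0.1, 0.1, 0.05]
x_adv = fgsm(x_clean, epsilon=0.05)
print(predict(x_clean))  # QPSK  (score 0.06)
print(predict(x_adv))    # BPSK  (score 0.06 - 0.05 * 1.8 = -0.03)
```

Each input element moves by only 0.05, yet the score drops by epsilon times the sum of the absolute weights, which is enough to cross the decision boundary.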

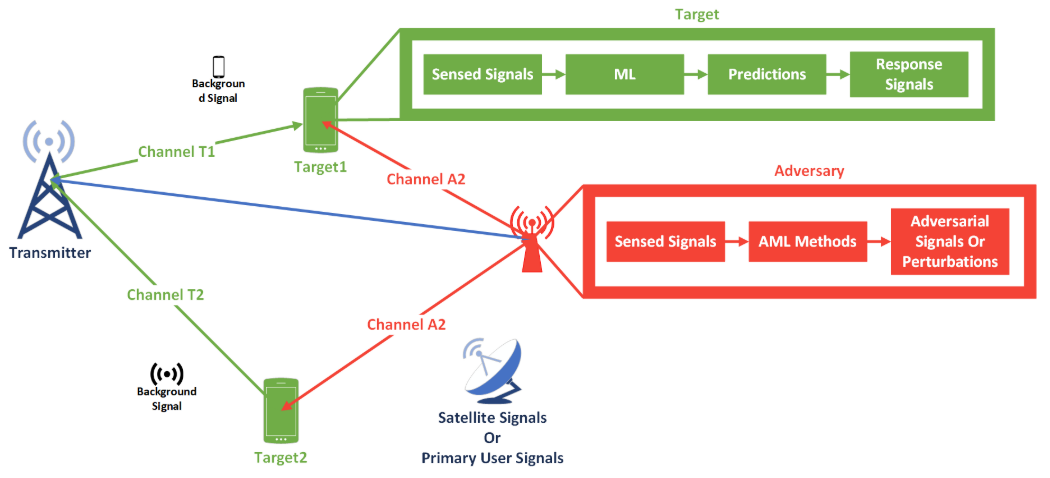

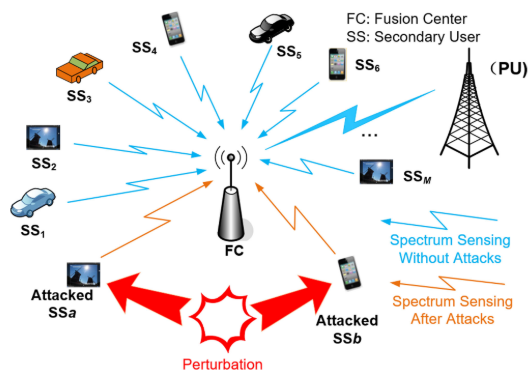

The shared and broadcast nature of wireless communications allows attackers to tamper with signals that are transmitted over the air. Thus, adversarial attacks on modulation classifiers may be launched from malicious transmitters, or from legitimate transmitters and receivers that have been infected and compromised by malware [8]. Figure 4 illustrates an adversary that transmits adversarial perturbations from a malicious transmitter. Figure 5 illustrates that transmitted adversarial perturbations may affect several connected devices, such as machine learning models running on vehicles and mobile phones.

Multi-Objective GAN-Based Adversarial Attack Technique

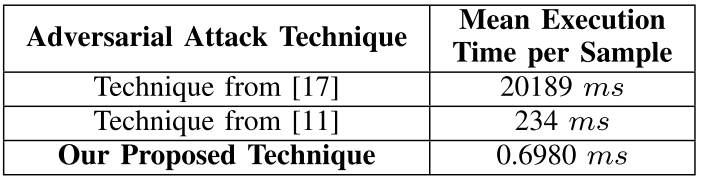

Since adversarial attacks may compromise the operation of wireless receivers, several research works have investigated this problem. The work in [8], for example, proposes an adversarial attack technique that significantly reduces the accuracy of modulation classifiers. Moreover, it crafts adversarial perturbations and adds them to the samples received by wireless receivers at least 335 times faster than the other techniques evaluated, which raises serious concerns about using machine learning-based modulation classifiers.

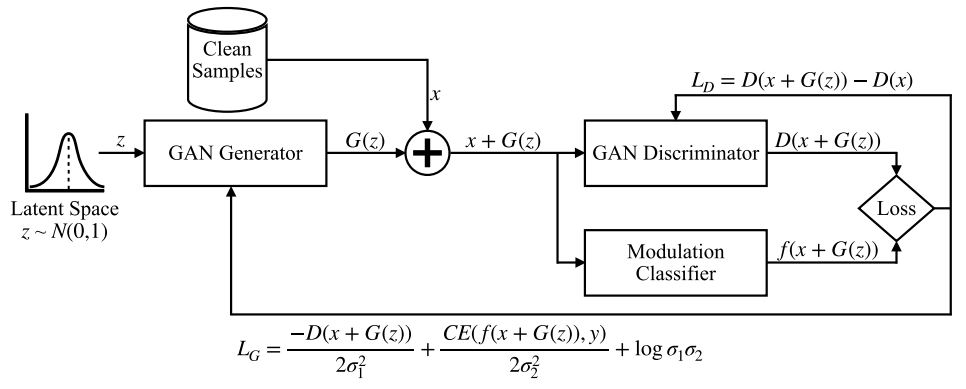

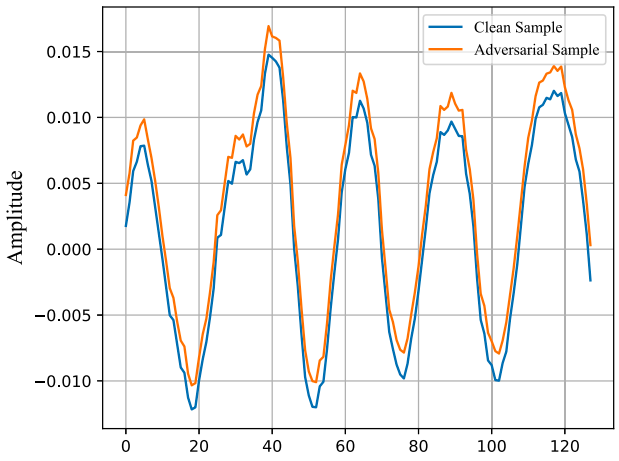

Furthermore, the work in [8] assumes that a wireless receiver has been compromised by malware, so that the proposed adversarial attack is launched directly on that receiver. The attack relies on generative adversarial networks (GANs), a deep learning framework that trains two competing neural networks: a generator and a discriminator [8]. The GAN generator learns to produce samples that are similar to those of a training set, whereas the GAN discriminator learns to distinguish between real samples and samples produced by the generator. The authors of [8] modified the GAN structure so that the generator produces adversarial perturbations, which are then used to tamper with the samples received by the wireless receiver. Figure 6 shows the GAN architecture proposed in [8]. Figure 7 illustrates the clean and tampered versions of a received sample to which an adversarial perturbation has been added. Because adversarial samples must not be easily recognized, note that the clean and adversarial samples depicted in Figure 7 are very similar to each other.
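The sketch below shows, in simplified form, how the objectives of a GAN-based perturbation generator can be combined into losses. It follows the general AdvGAN-style recipe (a GAN term, a term that pushes the target classifier away from the true class, and a term that bounds the perturbation size), not the exact multi-objective formulation of [8]; the function names, the weights `alpha` and `beta`, and the L2 budget are hypothetical. Only the loss values are computed here; the neural networks and the training loop, in which the discriminator and generator alternately minimize their losses, are omitted.

```python
import math


def bce(p, target):
    """Binary cross-entropy of probability p against a 0/1 target."""
    p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_clean, d_perturbed):
    # The discriminator should output 1 for clean samples, 0 for perturbed ones
    return bce(d_clean, 1) + bce(d_perturbed, 0)


def generator_loss(d_perturbed, p_true_class, perturbation, budget,
                   alpha=1.0, beta=10.0):
    # 1) GAN term: make perturbed samples look clean to the discriminator
    gan_term = bce(d_perturbed, 1)
    # 2) Adversarial term: push the classifier's probability for the true
    #    modulation scheme towards 0
    adversarial_term = bce(p_true_class, 0)
    # 3) Size term: hinge penalty only when the L2 norm exceeds the budget
    norm = math.sqrt(sum(abs(d) ** 2 for d in perturbation))
    size_term = max(0.0, norm - budget)
    return gan_term + alpha * adversarial_term + beta * size_term


# A small perturbation that fools the classifier gets the lowest loss
fooling = generator_loss(0.5, p_true_class=0.1, perturbation=[0.01, -0.02], budget=0.05)
failing = generator_loss(0.5, p_true_class=0.9, perturbation=[0.01, -0.02], budget=0.05)
too_big = generator_loss(0.5, p_true_class=0.1, perturbation=[0.3, 0.4], budget=0.05)
print(fooling < failing, fooling < too_big)  # True True
```

Once trained, such a generator produces a perturbation with a single forward pass, instead of running an optimization for every received sample.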

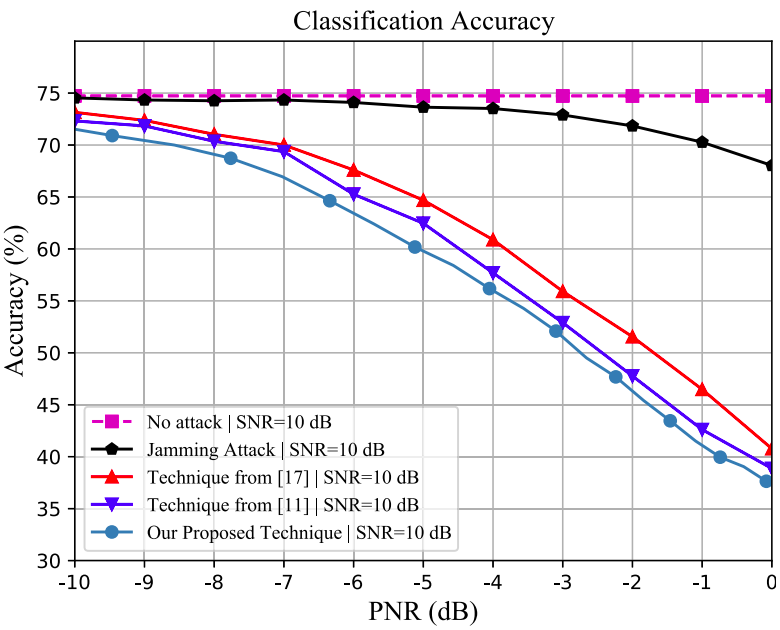

The work in [8] evaluated the proposed adversarial attack on the VT-CNN2 modulation classifier. Figure 8 shows VT-CNN2’s accuracy on clean samples and on adversarial samples produced by the technique proposed in [8], by two other adversarial attack techniques, and by a jamming attack, which adds random noise to received samples instead of specifically crafted perturbations. Finally, Figure 9 shows the mean time each technique evaluated in Figure 8 takes to craft adversarial perturbations and add them to received samples. In terms of speed, the technique proposed in [8] significantly outperforms the other two adversarial attack techniques, crafting perturbations in less than 0.7 ms.
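Why does a crafted perturbation hurt more than jamming noise of the same power? A minimal sketch, assuming a toy linear classifier over 256 inputs with hypothetical weights: it compares how much an FGSM-style perturbation and random Gaussian noise rescaled to the same energy (L2 norm) shift the classifier's score. It does not reproduce the VT-CNN2 results of Figure 8; it only illustrates the intuition that random noise mostly points in directions the classifier is insensitive to.

```python
import math
import random


def linear_score(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))


def l2(v):
    return math.sqrt(sum(x * x for x in v))


def crafted_perturbation(weights, epsilon):
    # FGSM-style: move every element by epsilon against the gradient's sign
    return [-epsilon * ((w > 0) - (w < 0)) for w in weights]


def jamming_perturbation(n, target_norm, rng):
    # Random Gaussian noise rescaled to the same L2 norm (same energy)
    noise = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = l2(noise)
    return [v * target_norm / norm for v in noise]


def compare(n=256, epsilon=0.01, trials=1000, seed=0):
    rng = random.Random(seed)
    weights = [math.cos(i) for i in range(n)]  # hypothetical toy weights
    crafted = crafted_perturbation(weights, epsilon)
    # For a linear classifier, the score shift is just weights . perturbation
    crafted_shift = linear_score(weights, crafted)  # always negative
    jam_shifts = [linear_score(weights, jamming_perturbation(n, l2(crafted), rng))
                  for _ in range(trials)]
    mean_abs_jam_shift = sum(abs(s) for s in jam_shifts) / trials
    return crafted_shift, mean_abs_jam_shift


crafted_shift, jam_shift = compare()
print(crafted_shift < 0, abs(crafted_shift) > 5 * jam_shift)  # True True
```

In this toy setting, the crafted perturbation aligns with the classifier's weights in every dimension, so its effect adds up across all 256 inputs, while the effects of random noise of equal energy largely cancel out.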

Conclusion

In this post, we discussed how adversarial attacks may compromise modulation classifiers and potentially interrupt wireless communications. As shown, adversarial attacks represent a major threat whose impact goes far beyond image classification applications. Therefore, it is urgent to improve machine learning-based systems and make them resistant to such sophisticated attacks. Moreover, since most works on adversarial attacks focus on image classification, more research is still needed to better understand their impact in other fields, such as cybersecurity. At Tempest, we are aware of such threats and are developing defense techniques to better protect your business! Stay tuned for our next posts!

References

[1] A. Chakraborty, M. Alam, V. Dey, A. Chattopadhyay, and D. Mukhopadhyay, “Adversarial attacks and defences: A survey,” arXiv preprint arXiv:1810.00069, 2018.

[2] J. Liu, M. Nogueira, J. Fernandes and B. Kantarci, “Adversarial Machine Learning: A Multilayer Review of the State-of-the-Art and Challenges for Wireless and Mobile Systems,” in IEEE Communications Surveys & Tutorials, vol. 24, no. 1, pp. 123-159, First Quarter 2022, doi: 10.1109/COMST.2021.3136132.

[3] B. Flowers, R. M. Buehrer and W. C. Headley, “Evaluating Adversarial Evasion Attacks in the Context of Wireless Communications,” in IEEE Transactions on Information Forensics and Security, vol. 15, pp. 1102-1113, 2020, doi: 10.1109/TIFS.2019.2934069.

[4] T. J. O’Shea, J. Corgan, and T. C. Clancy, “Convolutional radio modulation recognition networks,” in Proc. Int. Conf. Eng. Appl. Neural Netw., Aberdeen, U.K.: Springer, 2016, pp. 213–226.

[5] T. J. O’Shea, T. Roy, and T. C. Clancy, “Over-the-air deep learning based radio signal classification,” IEEE J. Sel. Topics Signal Process., vol. 12, no. 1, pp. 168–179, Feb. 2018.

[6] PadaKuu, “AM Modulation/Demodulation”. Accessed: Aug. 30, 2022. [Online]. Available: https://padakuu.com/articles/images/cover/cover-170826052205.jpg

[7] Y. Lin, H. Zhao, X. Ma, Y. Tu, and M. Wang, “Adversarial attacks in modulation recognition with convolutional neural networks,” IEEE Trans. Rel., vol. 70, no. 1, pp. 389–401, Mar. 2021.

[8] P. Freitas de Araujo-Filho, G. Kaddoum, M. Naili, E. T. Fapi and Z. Zhu, “Multi-Objective GAN-Based Adversarial Attack Technique for Modulation Classifiers,” in IEEE Communications Letters, vol. 26, no. 7, pp. 1583-1587, July 2022, doi: 10.1109/LCOMM.2022.3167368.

[9] M. Sadeghi and E. G. Larsson, “Adversarial Attacks on Deep-Learning Based Radio Signal Classification,” in IEEE Wireless Communications Letters, vol. 8, no. 1, pp. 213-216, Feb. 2019, doi: 10.1109/LWC.2018.2867459.

[10] T. O’Shea, “Modulation Recognition Example: RML2016.10a Dataset + VT-CNN2 Mod-Rec Network”. Accessed: Aug. 30, 2022. [Online]. Available: https://github.com/radioML/examples/blob/master/modulation_recognition/RML2016.10a_VTCNN2_example.ipynb